Image difference detection

20 Jan 2014 - Chris Brown

Tracking significant changes in an environment can be formulated as a problem of measuring differences between snapshots of that environment. In its simplest form, this is a basic classification problem: a simple threshold fit or a nearest-neighbors task.
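As a rough illustration of the threshold version, here is a minimal sketch using NumPy. The function names, the mean-absolute-difference score, and the midpoint rule for fitting the threshold are all assumptions made for the example, not a description of any particular implementation.

```python
import numpy as np

def change_score(a, b):
    """Mean absolute per-pixel difference of two uint8 images, in [0, 1]."""
    # Convert to float first so the subtraction cannot wrap around.
    a = a.astype(np.float32) / 255.0
    b = b.astype(np.float32) / 255.0
    return float(np.mean(np.abs(a - b)))

def fit_threshold(unchanged_scores, changed_scores):
    """Hypothetical, deliberately crude fit: midpoint of the two class means."""
    return float((np.mean(unchanged_scores) + np.mean(changed_scores)) / 2.0)

def is_changed(a, b, threshold):
    """Classify a pair of snapshots as changed if the score exceeds the threshold."""
    return change_score(a, b) > threshold

# Toy data: an empty scene, the same scene with slight sensor noise,
# and the scene with a bright object covering half the frame.
base = np.zeros((4, 4), dtype=np.uint8)
noisy = np.full((4, 4), 2, dtype=np.uint8)
moved = base.copy()
moved[:, :2] = 255

threshold = fit_threshold(
    [change_score(base, noisy)],   # labeled "no change"
    [change_score(base, moved)],   # labeled "change"
)
```

With real photographs, the scores for the two classes overlap far more than in this toy data, which is why the noise handling matters so much.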

With the Raspberry Pi and Pi camera, there are additional challenges.

The noise produced by the relatively small camera sensor can show up as significant variance, as depicted here. My shape at my desk is hard to make out, swamped by the amplification of minor noise throughout the rest of the image:

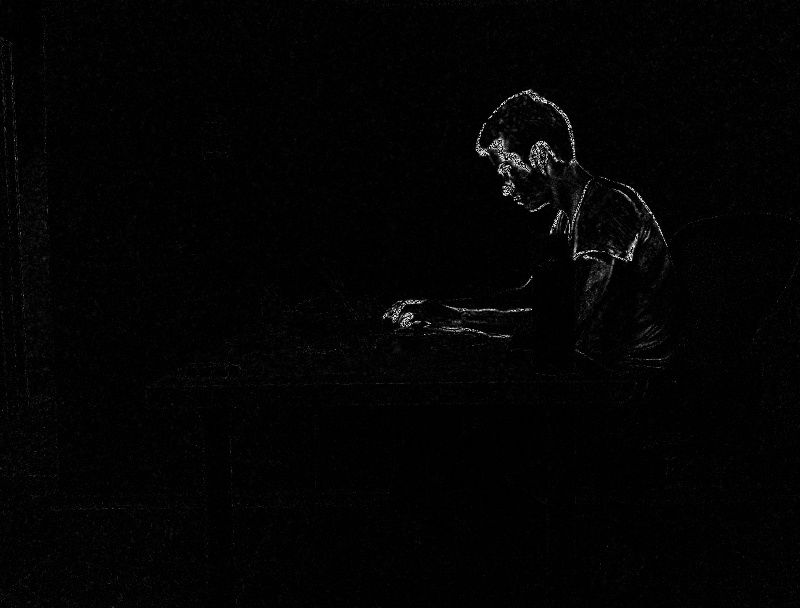

With better clipping and normalization, the mass of change clarifies. The pixels are originally represented by unsigned 8-bit ints, so if you try to do much arithmetic on them in that format, you’re liable to lose a lot of information: any result outside the 0 to 255 range either wraps around or gets clipped.
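To make the 8-bit pitfall concrete, here is a small sketch, assuming the images are held as NumPy `uint8` arrays. The helper names `abs_diff` and `to_uint8` are made up for this example.

```python
import numpy as np

def abs_diff(a, b):
    """Absolute per-pixel difference of two uint8 images, as floats in [0, 1]."""
    # Promote to float before subtracting; uint8 arithmetic wraps modulo 256.
    a = a.astype(np.float32) / 255.0
    b = b.astype(np.float32) / 255.0
    return np.abs(a - b)

def to_uint8(diff):
    """Clip to [0, 1] and rescale to 8-bit for display."""
    return (np.clip(diff, 0.0, 1.0) * 255.0).round().astype(np.uint8)

before = np.array([[10, 200]], dtype=np.uint8)
after = np.array([[12, 190]], dtype=np.uint8)

# Naive subtraction stays in uint8: 10 - 12 wraps around to 254,
# so a tiny change shows up as a near-white pixel.
wrapped = before - after

# Float difference, then back to 8-bit: the same change is a faint 2.
safe = to_uint8(abs_diff(before, after))
```

Here `wrapped` comes out as `[[254, 10]]` while `safe` is `[[2, 10]]`, which is the kind of amplified noise that swamps the real change.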

We can also produce an embossing effect, but while cute, this isn’t really what we want, since there’s still a lot going on besides my shape.

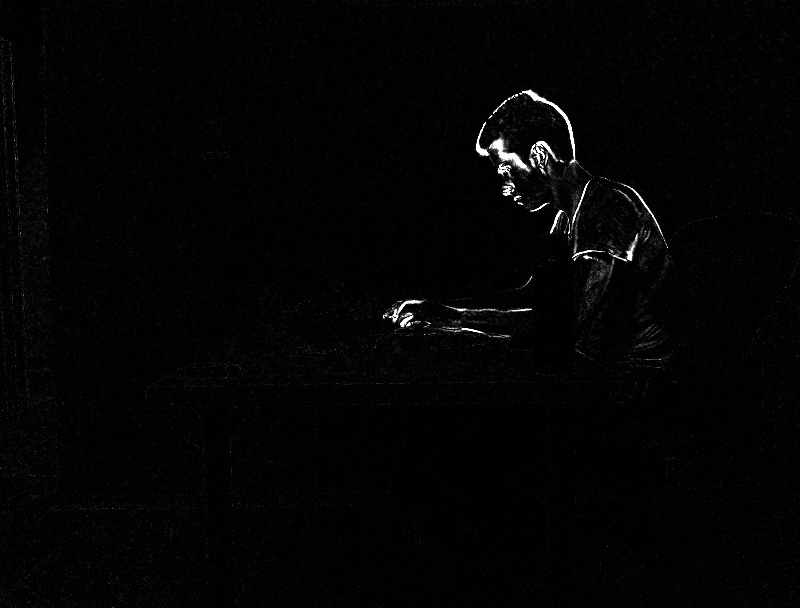

Squaring the difference produces an image with much better concentration: the greater the difference, the whiter the area. Because the relation is quadratic rather than linear, minor changes become even less apparent, as we want them to be.
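A minimal sketch of the squared difference, assuming NumPy `uint8` inputs normalized to the 0 to 1 range first (the function name is hypothetical):

```python
import numpy as np

def squared_diff(a, b):
    """Per-pixel squared difference of two uint8 images, as floats in [0, 1]."""
    a = a.astype(np.float32) / 255.0
    b = b.astype(np.float32) / 255.0
    return (a - b) ** 2

# One small change (0.2 of full scale) and one large change (0.8).
before = np.array([[0, 0]], dtype=np.uint8)
after = np.array([[51, 204]], dtype=np.uint8)

linear = np.abs(before.astype(np.float32) - after.astype(np.float32)) / 255.0
quadratic = squared_diff(before, after)
```

The linear differences are 0.2 and 0.8, a ratio of 4. Squared, they become 0.04 and 0.64, a ratio of 16, so the small change fades much further toward black relative to the large one.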

Again, taking care to smooth and normalize before the operation improves the final result.
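The post does not say which smoothing is used, so the following is only one possible sketch: a simple box blur written in plain NumPy, applied to both images before taking the squared difference. The names `box_blur` and `smoothed_squared_diff`, and the choice of a box filter, are assumptions for illustration.

```python
import numpy as np

def box_blur(img, radius=1):
    """Average each pixel with its neighbors in a (2*radius+1)^2 window."""
    img = img.astype(np.float32)
    # Repeat edge pixels so the output keeps the input's shape.
    padded = np.pad(img, radius, mode="edge")
    k = 2 * radius + 1
    h, w = img.shape
    out = np.zeros_like(img)
    # Sum the k*k shifted copies of the image, then divide by the window size.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def smoothed_squared_diff(a, b, radius=1):
    """Blur and normalize both grayscale uint8 images, then square the difference."""
    a = box_blur(a, radius) / 255.0
    b = box_blur(b, radius) / 255.0
    return (a - b) ** 2

# A single hot pixel (isolated noise) is spread out and flattened by the blur.
spike = np.zeros((3, 3), dtype=np.uint8)
spike[1, 1] = 9
blurred = box_blur(spike)
```

Blurring trades spatial detail for noise suppression: an isolated noisy pixel is averaged down before squaring, whereas a large region of real change survives mostly intact.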

This method is very sensitive, as shown by two images taken in close succession while I tried to be as still as possible:

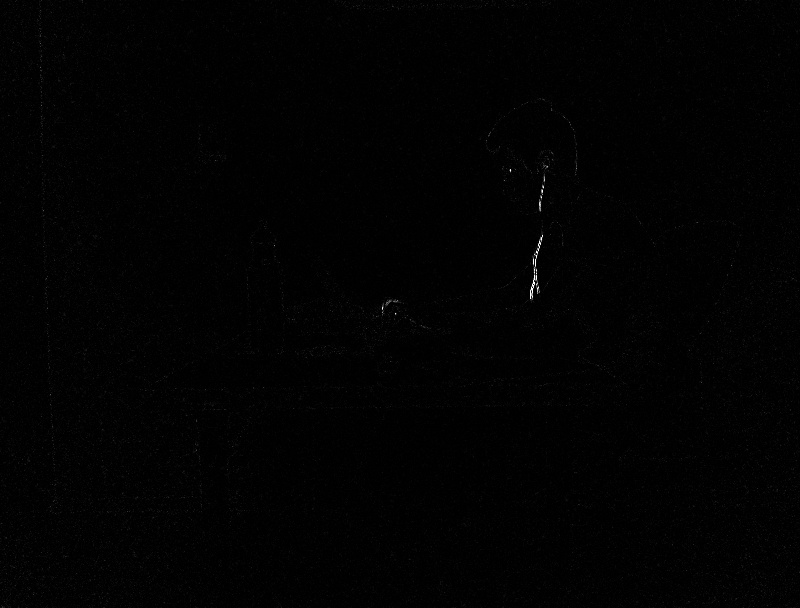

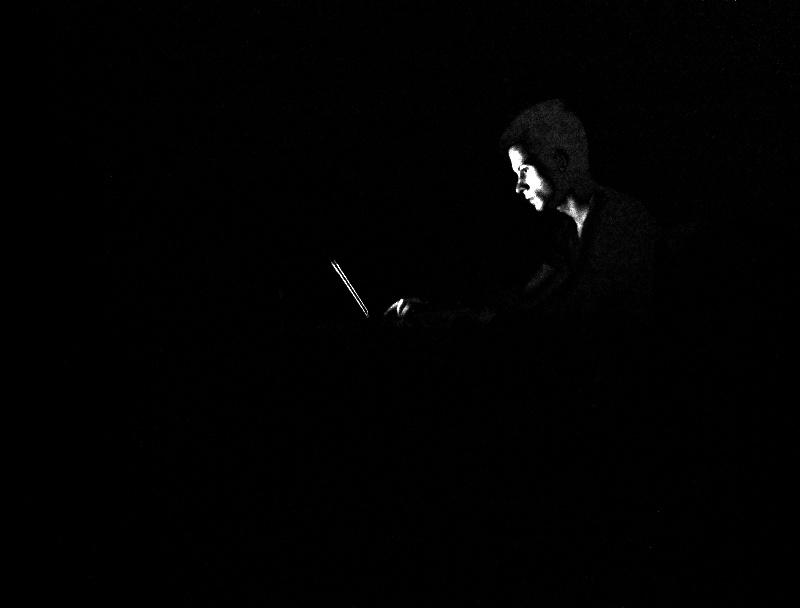

In low-light conditions, the diff becomes more of a direct photograph. This is a potential issue, since the classifier will be expecting a typical diff pattern. In the following, the room light is off, the laptop is the only source of light, and I am not in the first of the two images:

Best of all, some of the diffs can look quite ghastly: